Top 5 Web Scraping Use Cases for Automation, Machine Learning, and Business Insights

Ethan Collins

Pattern Recognition Specialist

17-Oct-2025

In the wake of the digital revolution, data has become an enterprise's most valuable asset. Web Scraping, the key technology for efficiently acquiring massive amounts of public network information, is increasingly becoming the cornerstone for driving business automation, empowering machine learning models, and deepening commercial insights. It is no longer just a technical tool but a critical strategic capability for businesses to gain a competitive edge and enable real-time decision-making.

This article will delve into the five core applications of web scraping across the three strategic domains of "Automation," "Machine Learning," and "Business Insights." We will provide unique insights and practical implementation advice to help enterprises surpass their competitors and build a high-value, data-driven business.

I. Web Scraping: A Leap from Technology to Strategy

Traditional market research and data collection methods are often time-consuming, costly, and lack real-time capabilities. Web scraping, by using automated programs (crawlers) to simulate human browsing behavior and extract structured data from web pages, significantly enhances the efficiency and scale of data acquisition.

Three Strategic Values of Web Scraping:

- Automation: Delegate repetitive, time-consuming data collection tasks to machines, freeing up human resources to focus on analysis and decision-making.

- Machine Learning: Provide large-scale, high-quality, and customized training datasets for complex AI models—the lifeblood of model performance.

- Business Insights: Offer a real-time, comprehensive panorama of the market, supporting dynamic pricing, competitive analysis, and trend forecasting.

II. In-Depth Analysis of Five Core Application Scenarios

We will focus on the five most impactful application scenarios, which are not only common industry practices but also key to achieving differentiated competition.

1. Empowering Machine Learning Models: The "Data Pipeline" for High-Quality Training Data

In the age of Artificial Intelligence, it is widely accepted that "data determines the upper limit of a model." Web scraping is one of the most effective methods for building high-quality, customized training datasets.

| Challenge | Web Scraping Solution | Unique Value and Insight |
|---|---|---|
| Public datasets are outdated or irrelevant | Real-time scraping of domain-specific data ensures data freshness and relevance. | Customized Label Generation: By scraping specific website reviews, tags, or classification information, more granular labels can be automatically generated for the data, far exceeding the granularity of general datasets. |
| Insufficient data volume | Scalable scraping of text, images, video metadata, etc., to quickly build million-level datasets. | Multimodal Data Fusion: Scraping not just text, but also associated image descriptions and user interaction data, to train more complex cross-modal AI models. |
| Data bias | Scraping data from multiple, different sources for cross-validation and balancing to reduce data bias from a single source. | Data Drift Monitoring: Continuously scrape data and compare it with the model's training data to promptly detect changes in data distribution (data drift), guiding model retraining. |
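
The data drift monitoring idea in the table can be sketched with a simple distribution comparison. The example below computes a Population Stability Index (PSI) over category labels from the training snapshot and from a fresh scrape. The labels, the function names, and the 0.2 cutoff are illustrative assumptions, not part of any specific tool; treat it as a starting point rather than a complete drift detector.

```python
import math
from collections import Counter


def category_shares(labels, categories, eps=1e-6):
    """Share of each category in `labels`, floored at eps so log() stays defined."""
    counts = Counter(labels)
    total = max(len(labels), 1)
    return [max(counts[c] / total, eps) for c in categories]


def population_stability_index(train_labels, fresh_labels):
    """PSI between two categorical samples; 0.0 means identical distributions."""
    categories = sorted(set(train_labels) | set(fresh_labels))
    p = category_shares(train_labels, categories)
    q = category_shares(fresh_labels, categories)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical labels: the training snapshot vs. a fresh scrape.
train = ["phone"] * 50 + ["laptop"] * 50
fresh = ["phone"] * 90 + ["laptop"] * 10

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff; tune per project
psi = population_stability_index(train, fresh)
drifted = psi > DRIFT_THRESHOLD  # True here, which would suggest retraining
```

A scheduled job could run this comparison after each scrape and flag the model for retraining when the index crosses the chosen threshold.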

【Practical Advice】: When scraping data for ML models, the data cleaning and structuring process should be considered a core component of the scraping pipeline, ensuring uniformity of data format and accuracy of labels.
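
As a rough illustration of treating cleaning as part of the pipeline, the sketch below normalizes scraped review records into a uniform structure, drops incomplete rows, and removes duplicates. The field names (`text`, `rating`), the 1 to 5 rating scale, and the `Review` type are assumptions made for the example.

```python
import html
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    text: str
    rating: int


def clean_text(raw):
    """Strip HTML tags, decode entities, and collapse whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    unescaped = html.unescape(no_tags)
    return re.sub(r"\s+", " ", unescaped).strip()


def parse_rating(raw):
    """Return the first integer in the field if it is within 1..5, else None."""
    match = re.search(r"\d+", str(raw))
    if not match:
        return None
    value = int(match.group())
    return value if 1 <= value <= 5 else None


def clean_records(raw_records):
    """Convert raw scraped dicts into deduplicated Review objects."""
    seen = set()
    cleaned = []
    for record in raw_records:
        text = clean_text(record.get("text", ""))
        rating = parse_rating(record.get("rating", ""))
        if not text or rating is None:
            continue  # drop rows with missing text or an unusable label
        key = text.lower()
        if key in seen:
            continue  # drop duplicates that differ only in case or markup
        seen.add(key)
        cleaned.append(Review(text=text, rating=rating))
    return cleaned


# Hypothetical raw rows as they might come out of a scraper.
raw = [
    {"text": "<p>Great &amp; light</p>", "rating": "5 stars"},
    {"text": "great &amp; light", "rating": "5"},
    {"text": "   ", "rating": "3"},
    {"text": "Stopped working", "rating": "n/a"},
]
reviews = clean_records(raw)
```

Only the first row survives here: the second is a duplicate, the third has no text, and the fourth has no usable label.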

2. Real-Time Competitive Price Monitoring and Dynamic Pricing Strategy

In the e-commerce and retail sectors, price is one of the most direct factors influencing consumer purchasing decisions. Web scraping enables near-real-time monitoring of competitors' prices, inventory, and promotional activities, thereby supporting Dynamic Pricing strategies.

By continuously scraping the SKU (Stock Keeping Unit) prices, discount information, and inventory status of major competitors, enterprises can feed this data into their pricing algorithms. Machine learning models can then adjust the product prices in real-time based on demand elasticity, competitor movements, and historical sales data to maximize profit or market share.

【Differentiated Value】: Beyond just price, scraping "Price Change History" and "Bundle Sales Strategies" provides deeper insights. For example, analyzing the magnitude of a competitor's price adjustments during specific holidays can predict their future marketing behavior.
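
To make the pricing loop concrete, here is a deliberately simple rule-based stand-in for the machine learning model described above: undercut the cheapest in-stock competitor by a small amount, but never go below a minimum margin over cost. The function name, the 10% margin, and the one-cent undercut are assumptions chosen for illustration.

```python
from decimal import Decimal


def suggest_price(our_cost, competitor_prices,
                  min_margin=Decimal("0.10"), undercut=Decimal("0.01")):
    """Suggest a price from scraped competitor prices.

    `competitor_prices` holds Decimal prices, with None for out-of-stock items.
    Returns None when no competitor price is available.
    """
    floor = (our_cost * (1 + min_margin)).quantize(Decimal("0.01"))
    in_stock = [price for price in competitor_prices if price is not None]
    if not in_stock:
        return None
    target = min(in_stock) - undercut
    return max(target, floor)  # never price below the margin floor


# Hypothetical scraped prices for one SKU.
price_a = suggest_price(Decimal("10.00"), [Decimal("12.50"), Decimal("11.99"), None])
price_b = suggest_price(Decimal("10.00"), [Decimal("10.50")])
```

Using `Decimal` rather than floats avoids rounding surprises when prices are compared or stored. A production system would replace the fixed rule with a model that also accounts for demand elasticity and sales history.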

3. Market Sentiment Analysis and Brand Reputation Management

Social media, forums, news websites, and e-commerce review sections contain a vast amount of consumer sentiment data. By scraping this unstructured text data and combining it with Natural Language Processing (NLP) technology, enterprises can perform large-scale Sentiment Analysis.

- Business Insights: Instantly understand market feedback after a new product launch, quickly identifying product defects or service pain points.

- Automation: Automatically identify negative comments and crisis signals, triggering an early warning system for automated brand reputation management.

【Unique Insight】: The granularity of sentiment analysis should be refined from the "product" level to the "product feature" level. For instance, when scraping reviews for a mobile phone, analyze the sentiment not only for the product as a whole but also for specific keywords like "battery life" and "camera performance" to guide product improvement.
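
Feature-level sentiment can be sketched with a tiny keyword lexicon. The example below splits each review into clauses, scores each clause, and credits the score to any product feature mentioned in it. The aspect keywords and the positive and negative word lists are toy assumptions; a real system would use a trained NLP model instead.

```python
import re

# Toy lexicons, assumed for illustration only.
ASPECTS = {
    "battery life": ["battery"],
    "camera performance": ["camera", "photo", "photos"],
}
POSITIVE = {"great", "excellent", "good", "amazing", "sharp"}
NEGATIVE = {"poor", "bad", "terrible", "drains", "blurry"}


def aspect_sentiment(reviews):
    """Sum clause-level polarity per product feature across all reviews."""
    scores = {aspect: 0 for aspect in ASPECTS}
    for review in reviews:
        # Split on sentence punctuation and on "but", which often flips sentiment.
        for clause in re.split(r"[.!?;]|\bbut\b", review.lower()):
            words = set(re.findall(r"[a-z']+", clause))
            polarity = len(words & POSITIVE) - len(words & NEGATIVE)
            for aspect, keywords in ASPECTS.items():
                if any(keyword in words for keyword in keywords):
                    scores[aspect] += polarity
    return scores


# Hypothetical scraped reviews for one phone.
sample_reviews = [
    "The camera is great but the battery drains fast.",
    "Battery is terrible. Photos look sharp.",
]
feature_scores = aspect_sentiment(sample_reviews)
```

For these two reviews the camera ends up with a positive score and the battery with a negative one, even though each review as a whole is mixed.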

4. Automated Lead Generation and Market Expansion

For B2B enterprises, finding potential customers and market partners is key to sustained growth. Web scraping can automate this tedious process.

By scraping data from industry directories, corporate listings, job boards, and professional social platforms, a target customer database can be built, including company names, contacts, job titles, technology stacks, and company size.
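
Because the same company usually appears in several sources, merging records is a natural first step when building such a database. The sketch below keys leads on a normalized website domain and combines the technology stacks seen across sources. The record fields (`company`, `website`, `tech_stack`) and the example domain are assumptions, and only company-level data is used.

```python
from urllib.parse import urlparse


def company_domain(url):
    """Normalize a website URL to a bare lowercase domain."""
    with_scheme = url if "://" in url else "https://" + url
    host = urlparse(with_scheme).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def merge_leads(records):
    """Merge records from multiple sources into one lead per domain."""
    merged = {}
    for record in records:
        key = company_domain(record["website"])
        lead = merged.setdefault(
            key,
            {"domain": key, "company": record["company"], "tech_stack": set()},
        )
        lead["tech_stack"].update(record.get("tech_stack", []))
    return list(merged.values())


# Hypothetical rows from a directory listing and a job board.
records = [
    {"company": "Acme", "website": "https://www.acme.example/about", "tech_stack": ["Python"]},
    {"company": "ACME Inc.", "website": "acme.example", "tech_stack": ["React"]},
]
leads = merge_leads(records)
```

The first company name seen for a domain is kept; a real pipeline would likely add rules for choosing the canonical name.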

【Practical Advice】: Combining this with a CAPTCHA-solving service can more effectively handle the anti-scraping mechanisms of target websites, ensuring the continuity and accuracy of lead data. For example, using a tool like CapSolver to solve complex AWS WAF or reCAPTCHA challenges helps keep the automated scraping process uninterrupted.

Further Reading: Solving complex CAPTCHA challenges is a critical step in acquiring high-quality sales leads. Learn more about solving AWS WAF CAPTCHA and reCAPTCHA v2/v3.

5. Financial Market Intelligence and Risk Prediction

The financial industry sets extremely high standards for data timeliness and accuracy. Web scraping plays an important role in financial intelligence, algorithmic trading, and risk management.

- Business Insights: Scraping real-time reports from news agencies, regulatory announcements, and financial discussions on social media to build event-driven trading strategies.

- Machine Learning: Training models to identify sentiment indicators and uncertainty indices within news texts to predict short-term fluctuations in stock prices.

【Differentiated Value】: Beyond scraping traditional financial data, scraping supply chain data (such as public information on shipping tracking and factory production status) can provide early macroeconomic signals for investment decisions—a unique advantage that traditional financial data sources often lack.

III. Web Scraping Technology Selection Comparison: Efficiency vs. Anti-Bot Measures

Choosing the right technology stack is crucial when implementing a web scraping project. Below is a comparison of several mainstream scraping methods in terms of efficiency, anti-bot capability, and cost:

| Feature | Self-Built Crawler (e.g., Python/Scrapy) | Commercial Scraping Service (e.g., Scraping API) | Headless Browser (e.g., Puppeteer/Playwright) |
|---|---|---|---|
| Development Cost | High (Requires handling all details) | Low (API call, quick integration) | Medium (Requires handling browser environment and resource consumption) |
| Scraping Efficiency | Extremely High (Optimized for specific targets) | High (Provider manages maintenance) | Lower (High resource consumption, slower speed) |
| Anti-Bot Capability | High (Customizable anti-bot strategies) | Extremely High (Professional team manages proxy pool and fingerprinting) | Medium (Simulates real browser behavior) |
| Maintenance Difficulty | Extremely High (Frequent updates needed for website structure changes) | Low (Provider manages maintenance) | Medium (Browser updates and environment configuration) |
| Best Use Case | Long-term, large-scale, highly customized projects | Fast, stable, high-concurrency commercial data needs | Scenarios requiring complex JavaScript execution or login |

【Unique Insight】: For commercial applications demanding high efficiency and strong anti-bot capabilities, a Commercial Scraping Service is often the more cost-effective choice, as it outsources the complex work of proxy management and anti-bot maintenance to a specialized team.

IV. Challenges and Countermeasures in Implementing Web Scraping

While web scraping holds immense potential, its practical operation still faces numerous challenges, especially in scenarios involving large-scale and high-frequency data collection.

Challenge 1: Escalation of Anti-Bot Mechanisms

Website anti-bot mechanisms are becoming increasingly sophisticated, ranging from simple IP blocking to complex behavioral analysis, TLS fingerprinting, and CAPTCHA challenges.

Countermeasures:

- Use High-Quality Proxy Services: Combine residential or datacenter proxies to rotate IPs and avoid being blocked.

- Simulate Real User Behavior: Use headless browsers to simulate mouse movements, scrolling, and clicks, and modify parameters like User-Agent and Headers to impersonate a regular user.

- Integrate CAPTCHA Solutions: For challenges like reCAPTCHA, Cloudflare, or AWS WAF CAPTCHA, integrate professional third-party CAPTCHA solving services (like CapSolver) to achieve automated bypass.
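
The proxy rotation countermeasure can be sketched with the Python standard library alone. The example below cycles through a proxy list and builds one `urllib` opener per proxy with an explicit User-Agent header. The proxy URLs and the User-Agent string are placeholders, and no request is actually sent here.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; a real list would come from a proxy provider.
PROXIES = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
]


def proxy_rotation(proxies):
    """Endless round-robin iterator over the proxy list."""
    return itertools.cycle(proxies)


def build_opener_for(proxy_url, user_agent):
    """Create a urllib opener that routes traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    opener.addheaders = [("User-Agent", user_agent)]
    return opener


rotation = proxy_rotation(PROXIES)
first_three = [next(rotation) for _ in range(3)]
opener = build_opener_for(first_three[0], "example-scraper/1.0")
# opener.open(url, timeout=10) would send the request through the chosen proxy.
```

Round-robin is the simplest rotation policy; removing proxies that return repeated errors is a common next refinement.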

Challenge 2: Legal and Ethical Boundaries

Data scraping must comply with laws, regulations, and the website's Terms of Service.

Countermeasures:

- Scrape Public Data Only: Strictly avoid scraping private personal data or data that requires login access.

- Adhere to the Robots.txt Protocol: Check the target website's robots.txt file before scraping and respect the owner's scraping restrictions.

- Control Scraping Frequency: Set reasonable request intervals to avoid putting excessive load on the target website's servers.
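
The last two countermeasures lend themselves to a short sketch using Python's built-in `urllib.robotparser` plus a minimal throttle. The robots.txt content, the `example-bot` agent name, and the interval values are assumptions for the example; in practice the rules would be fetched from the target site.

```python
import time
import urllib.robotparser


def build_robot_rules(robots_txt):
    """Parse robots.txt text into a rule object that can answer can_fetch()."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval_seconds):
        self.min_interval = min_interval_seconds
        self._last = None

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"
rules = build_robot_rules(ROBOTS_TXT)

allowed = rules.can_fetch("example-bot", "https://example.com/products")
blocked = rules.can_fetch("example-bot", "https://example.com/private/page")

throttle = Throttle(min_interval_seconds=0.05)  # use seconds, not milliseconds, in practice
start = time.monotonic()
throttle.wait()  # first call returns immediately
throttle.wait()  # second call sleeps until the interval has passed
elapsed = time.monotonic() - start
```

A scraper would call `rules.can_fetch(...)` before each URL and `throttle.wait()` before each request, skipping any URL the site disallows.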

V. Conclusion and Outlook

Web scraping is an indispensable part of a modern enterprise's data-driven strategy. By applying it to core areas such as AI training data generation, dynamic pricing, market sentiment analysis, automated lead generation, and financial intelligence, businesses can gain real-time, precise commercial insights and maintain a competitive edge.

A successful web scraping strategy lies not only in technological advancement but also in adherence to legal regulations, respect for data ethics, and continuous adaptation to anti-bot challenges. With the ongoing development of AI technology, future web scraping will be more intelligent and adaptable, bringing unprecedented depth and breadth to business decision-making.

Appendix: Frequently Asked Questions (FAQ)

Q1: Is web scraping legal?

A1: The legality of web scraping depends on what is scraped and how. Scraping publicly accessible data (non-login, non-private information) is generally permitted in many jurisdictions, but you should still follow the target website's robots.txt directives and Terms of Service. Scraping copyrighted content or private personal data can violate copyright and privacy laws. It is advisable to consult legal professionals and always collect data responsibly and ethically.

Q2: Can scraped data be used directly for Machine Learning models?

A2: Generally, no. Raw scraped data often contains significant noise, missing values, inconsistent formats, and other issues. Before being used for Machine Learning models, it must undergo rigorous pre-processing steps such as Data Cleaning, Data Transformation, and Feature Engineering to ensure data quality and model accuracy.

Q3: What is the difference between web scraping and API calls?

A3: An API (Application Programming Interface) is an official interface proactively provided by a website or service for obtaining structured data; it is stable, efficient, and legal. Web scraping extracts data from the HTML content of a website and is used when an API is not provided or its functionality is limited. Whenever possible, prioritize using the API; only consider web scraping when the API is unavailable or insufficient for your needs.
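
The difference is easy to see side by side. In the sketch below, the same price is read once from a JSON API response and once from an HTML fragment using the standard-library `HTMLParser`. Both payloads, the `price` field, and the `span class="price"` markup are invented for the example.

```python
import json
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of the first <span class="price"> element."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.price = None

    def handle_starttag(self, tag, attrs):
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_data(self, data):
        text = data.strip()
        if self._in_price and text and self.price is None:
            self.price = float(text.lstrip("$"))

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False


def price_from_api(body):
    """API route: the structure is documented, so one lookup is enough."""
    return json.loads(body)["price"]


def price_from_html(body):
    """Scraping route: the value has to be located inside page markup."""
    parser = PriceParser()
    parser.feed(body)
    return parser.price


# Hypothetical payloads for the same product.
api_body = '{"sku": "A1", "price": 19.99}'
html_body = '<div class="product"><span class="price">$19.99</span></div>'

api_price = price_from_api(api_body)
html_price = price_from_html(html_body)
```

The API version depends only on a field name, while the HTML version depends on tag names, class names, and text formatting, all of which can change without notice.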

Q4: How does CapSolver help with CAPTCHA issues in web scraping?

A4: CapSolver is a professional automated CAPTCHA solving service. It utilizes advanced AI and Machine Learning technology to automatically recognize and solve various complex CAPTCHA types, such as reCAPTCHA v2/v3, Cloudflare, AWS WAF CAPTCHA, and more. By integrating the CapSolver API into your scraping workflow, you can achieve uninterrupted automated data collection, effectively solving the CAPTCHA obstacles in anti-bot mechanisms.

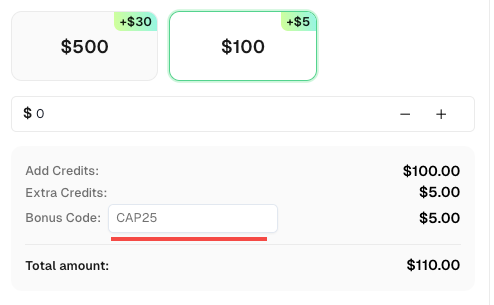

Redeem Your CapSolver Bonus Code

Don’t miss the chance to further optimize your operations! Use the bonus code CAP25 when topping up your CapSolver account and receive an extra 5% bonus on each recharge, with no limits. Visit the CapSolver Dashboard to redeem your bonus now!

Q6: How can I ensure my web scraping is sustainable (i.e., won't break due to website structure changes)?

A6: Website structure changes are one of the biggest challenges for scraping. Countermeasures include:

- Use a combination of CSS selectors or XPath: Do not rely on a single, overly specific selector.

- Establish a monitoring and alert system: Regularly check the scraping status of key data points and immediately raise an alert if scraping fails.

- Use AI-driven scraping tools: Some advanced tools (such as prompt-based scrapers) can use AI to adapt to minor website structure changes, reducing maintenance costs.
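
The first two countermeasures can be combined in a few lines. The sketch below tries a list of XPath selectors in order and reports which one matched, then raises an alert flag when too many extractions fail. It uses `xml.etree.ElementTree`, which only handles well-formed markup, so a real scraper would more likely use a dedicated HTML parser. The selectors, the sample page, and the 20% failure threshold are assumptions.

```python
import xml.etree.ElementTree as ET

# Ordered from most specific to most general; all hypothetical.
PRICE_SELECTORS = [
    ".//span[@id='price']",
    ".//span[@class='price']",
    ".//div[@class='product']/span",
]


def extract_first(root, selectors):
    """Return (text, selector_index) for the first selector that matches."""
    for index, selector in enumerate(selectors):
        node = root.find(selector)
        if node is not None and node.text and node.text.strip():
            return node.text.strip(), index
    return None, None


def needs_alert(results, max_failure_rate=0.2):
    """Flag a run when the share of failed extractions exceeds the threshold."""
    if not results:
        return True
    failures = sum(1 for value in results if value is None)
    return failures / len(results) > max_failure_rate


# A page where the id-based selector no longer matches after a redesign.
page = ET.fromstring(
    "<html><body><div class='product'>"
    "<span class='price'>19.99</span>"
    "</div></body></html>"
)
value, used_index = extract_first(page, PRICE_SELECTORS)
alert = needs_alert(["19.99", None, None])
```

Logging `used_index` is useful on its own: a shift from the first selector to a fallback is an early sign that the page layout has changed, even before extraction fails outright.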

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
